ABSTRACT

Project-Based Learning (PBL) has been lauded as a robust alternative to traditional, didactic instructional methods. Despite its growing adoption, comprehensive syntheses of its effect on student learning remain scarce. This study aims to fill this gap by systematically reviewing and meta-analyzing the impact of PBL on students’ academic performance across various disciplines. Following the PRISMA guidelines, we evaluated 70 research articles published between 2010 and 2023 that quantitatively measured PBL educational outcomes. Our analysis, conducted with dedicated meta-analysis software, revealed a consistent, moderate to substantial enhancement in student performance under PBL compared to conventional teaching. The aggregate mean weighted effect size (d+) was 0.652, indicating a significant and positive influence of PBL on academic achievement. This effect persisted across different sample sizes and time frames within the study period. The findings underscore PBL’s superiority in fostering academic success, particularly in science subjects. Consequently, this article contributes to the scholarly discourse by delineating the specific conditions and magnitudes of PBL’s effectiveness. Based on these findings, the study recommends that educational institutions integrate PBL more broadly, particularly in science education. Additionally, the study advocates for continuous, empirical research to refine and optimize PBL methodologies, ensuring their evolving effectiveness and adaptability in the dynamic landscape of educational pedagogies.

Keywords: Meta-Analysis, Academic achievement, Project-based learning, Effect size.

INTRODUCTION

Education is ever-evolving and continuously adapting to the demands and requirements of an increasingly globalized world (Djumanova & Makhmudov, 2020). One of the most vital aspects of education, especially in the 21st century, is fostering students’ ability to think critically, solve complex problems, and collaborate effectively (Suherman et al., 2021; Rehman et al., 2021a). In recent years, project-based learning (PBL) has emerged as a pedagogical approach that addresses these needs by engaging students in authentic, real-world tasks that require applying knowledge and skills across multiple domains (Boss & Krauss, 2007). As an instructional strategy, PBL emphasizes the collaborative learning process, providing students with opportunities to collaborate on projects to explore and solve real-world challenges and construct meaningful knowledge (Almulla, 2020). Rooted in the theories of constructivism and experiential learning, PBL shifts the focus of education from traditional teacher-centered, content-driven instruction to a more learner-centered, inquiry-driven approach (Rehman et al., 2021b). By allowing students to engage in authentic, interdisciplinary tasks, PBL fosters the development of essential skills such as critical thinking, problem-solving, creativity, and collaboration, widely recognized as crucial competencies for success in the modern workforce (Rees Lewis et al., 2019).

Over the past few decades, PBL has gained traction in educational circles across the globe as an innovative approach that emphasizes active learning and real-world application of knowledge, and numerous studies have been conducted to evaluate its effectiveness (Hawari & Noor, 2020). PBL involves students engaging in authentic, complex projects that require critical thinking, problem-solving, collaboration, and communication skills (Malik & Zhu, 2023). This pedagogical approach has been widely implemented in various educational settings, from primary schools to higher education institutions, to enhance students’ academic achievement and prepare them for the challenges of the 21st century (Randazzo et al., 2021; Rehman et al., 2021c).

The results of these studies have mainly been positive, with evidence suggesting that PBL can improve academic achievement, enhance motivation, and promote the development of higher-order thinking skills (Sari & Prasetyo, 2021). Despite the growing interest in PBL and its potential benefits, there remains a need for a comprehensive meta-analysis that examines the effectiveness of PBL on student achievement from 2010 to 2023. While some previous meta-analyses have focused on specific grade levels or subject areas, a comprehensive analysis spanning a broader range of educational contexts is lacking. By including studies conducted over 13 years, this meta-analysis aims to capture the most up-to-date research on the topic and provide a comprehensive understanding of the effectiveness of PBL.

While individual studies have explored the impact of PBL on student achievement, conducting a comprehensive meta-analysis allows for a systematic evaluation of the accumulated evidence to provide a more robust understanding of the effectiveness of PBL (Moallem, 2019). A meta-analysis synthesizes the findings from multiple primary studies, enabling researchers to identify patterns, trends, and overall effect sizes across various studies (Grewal et al., 2018). This approach provides a comprehensive assessment of the accumulated research and can help identify factors that may moderate the effectiveness of PBL.

This comprehensive meta-analysis seeks to synthesize the available research on the effectiveness of PBL in enhancing academic achievement globally (Chen & Yang, 2019). Examining studies that have investigated the impact of PBL on students’ performance also sheds light on the potential of this instructional approach to improve learning outcomes and contribute to developing a more skilled and innovative future workforce (Balemen & Keskin, 2018).

The present study offers a timely and significant contribution to the ongoing global educational reform and innovation conversation. This study aims to provide a comprehensive meta-analysis of the effectiveness of PBL in enhancing academic achievement in science education globally during 2010-2023. This article aims to inform policymakers, educators, and researchers about the potential benefits of this pedagogical approach and contribute to the development of effective strategies for cultivating a generation of students equipped with the knowledge, skills, and mindset necessary to thrive in the modern world.

LITERATURE REVIEW

PBL has been a subject of scholarly interest for decades, with its roots traceable back to key educational theorists like John Dewey, who advocated for learning by doing. Recent studies underscore PBL’s role in enhancing students’ critical thinking, problem-solving, and collaboration, positioning it as a crucial component of 21st-century education (Rehman et al., 2023).

EFFECTIVENESS OF PBL

PBL represents a significant shift in educational paradigms and is distinguished by its empirical substantiation across diverse learning environments (Rehman et al., 2023). This pedagogical approach has been rigorously scrutinized, revealing a compelling narrative of enhanced engagement and academic rigor (Shin, 2018). Its dynamic nature is central to PBL’s effectiveness, transforming students from passive recipients into active participants in the learning process (Zhang & Ma, 2023). This shift is not merely pedagogical but deeply cognitive, sparking an intrinsic motivation and a profound connection to the material (Kokotsaki et al., 2016). PBL has consistently demonstrated its ability to elevate academic achievement in various educational settings, from primary schools to universities (Condliffe, 2017).

It transcends traditional boundaries of learning, applying equally to sciences and humanities, mathematics, and arts. Empirical studies echo this sentiment, showing improved student grades and a deeper, more enduring understanding of complex subjects (Guo et al., 2020). This is attributable to PBL’s emphasis on real-world application, compelling students to apply theoretical knowledge to practical scenarios, reinforcing their learning, and making it more relevant (Dilekli, 2020).

Moreover, PBL is instrumental in cultivating essential 21st-century skills, including critical thinking and problem-solving (Loyens et al., 2023). In PBL, students engage with complex, real-world challenges and are compelled to analyze, synthesize, and innovate (Miller et al., 2021). This process is inherently collaborative, often in settings that mimic professional and communal environments (Yustina et al., 2020). Here, students refine their ability to navigate challenges, articulate solutions, and work cohesively, thereby honing skills invaluable in academic and real-life contexts (Pupik Dean et al., 2023). Additionally, PBL encourages a deep dive into content, promoting a cyclical learning process where concepts are not merely learned but experienced and re-experienced in varying contexts (Revelle et al., 2020). This iterative approach ensures a comprehensive and nuanced understanding, moving beyond rote memorization to a more sophisticated, integrated grasp of the material. Through repeated engagement and reflection, students develop a mastery that is broad in scope and deep in comprehension (An, 2023).

COMPARATIVE STUDIES AND META-ANALYSES

Meta-analyses serve as a powerful tool in educational research, aggregating findings from multiple studies to draw more robust conclusions (Meng, 2023). For instance, Groenewald et al. (2023) performed a comprehensive meta-analysis of PBL, illuminating its multifaceted benefits, and arguing that students involved in PBL enjoy a richer learning experience and demonstrate superior long-term retention of knowledge. This suggests that PBL is not just about learning for examinations; it is about fostering a deeper, more sustainable understanding of subject matter (Maia et al., 2023). Moreover, these studies reveal that PBL positively influences skill development, particularly in areas such as collaboration, communication, and self-directed learning, which are critical in today’s ever-changing world (Ananda et al., 2023). Finally, student satisfaction emerges as a prominent theme in these analyses, with learners preferring the engaging, relevant, and challenging nature of PBL tasks compared to more traditional, lecture-based approaches (Rehman et al., 2023).

While the benefits of PBL are well-documented, the literature highlights challenges in its implementation, including the need for significant teacher preparation, resource allocation, and adaptation of assessment strategies (Chen et al., 2021). Understanding these challenges is crucial for educators and policymakers to implement PBL effectively. Despite the extensive research, gaps remain, particularly in understanding how PBL can be effectively scaled and adapted across different cultural contexts or how it impacts diverse student populations (Gallagher & Savage, 2023).

THEORETICAL SUPPORT

The theoretical foundations of PBL are rooted in constructivist learning theories. PBL aligns with the principles of constructivism, which emphasizes the active construction of knowledge through meaningful experiences and social interactions (Perry, 2020). According to constructivist perspectives, students learn best when they are actively engaged in the learning process and when their learning experiences are situated in authentic and relevant contexts. PBL allows students to explore and construct their understanding of concepts and skills through hands-on projects, fostering deeper learning and long-term retention (Hassan & Ahmad, 2023). Additionally, PBL draws support from socio-cultural theory emphasizing the role of social interactions and collaborative learning in cognitive development (Leat, 2017). By working in groups, students engage in discussions, negotiate meaning, and share their perspectives, enhancing their understanding and learning outcomes. PBL also aligns with the principles of self-determination theory, as it promotes student autonomy, competence, and relatedness, fostering intrinsic motivation and engagement (Carriger, 2015). In the PBL environment, this journey is facilitated by engaging in problems and projects that are not merely academic exercises but mirror real-world challenges (Tuyen & Tien, 2021). This approach does not just fill students’ minds with facts but empowers them with the cognitive tools to build their understanding and insight (Shpeizer, 2019).

David Kolb’s Experiential Learning Theory further enriches our understanding of PBL. According to Kolb, effective learning occurs when a person progresses through a cycle of four stages: concrete experience, reflective observation, abstract conceptualization, and active experimentation (Devi & Thendral, 2023). PBL embodies this theory, offering a structured way for students to engage deeply with content by doing, reflecting, thinking, and then applying (Rees Lewis et al., 2019). It is about turning the classroom into a lab of curiosity, where theories and ideas are tested in the crucible of real-life application (Hussein, 2021). PBL insists on active engagement, not passive reception. Students in a PBL setup are like young scientists or detectives, piecing together knowledge through investigation, collaboration, and synthesis (Vasiliene-Vasiliauskiene et al., 2020). They are encouraged to probe deeply, not just to seek answers but to understand underlying principles and concepts (Hassan & Ahmad, 2023). This active process is coupled with reflection, an equally critical component in which learners look back, analyze, and evaluate their learning journey, gaining insights and preparing to apply this knowledge in future scenarios (Almulla, 2020).

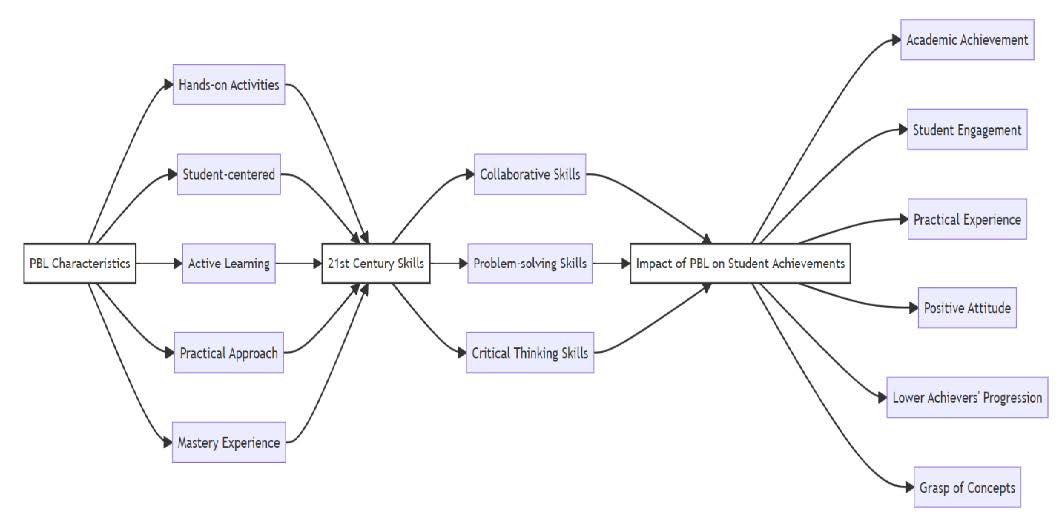

Figure 1

Characteristics of PBL.

PURPOSE OF THE STUDY

PBL is essential for enhancing students’ achievements and other skills in educational settings. However, few studies have systematically analyzed the characteristics of PBL in different fields of study and its impact on learning outcomes. Previous reviews of PBL have included systematic/content reviews (Ananda et al., 2023; Darmuki et al., 2023; Paryanto et al., 2023; Yustina et al., 2020) and one meta-analysis (Suyantiningsih et al., 2023). Our article focuses on PBL research published between 2010 and 2023 and examines whether PBL significantly enhances students’ academic performance relative to traditional instructional methods; the results indicate a medium to large positive effect. The review centers on students’ academic achievements in science and technology, physics, chemistry, and biology, employing meta-analytical techniques to compare the effectiveness of the PBL approach against conventional education methods.

Further, we identify characteristics of the literature that may influence reported claims about the efficacy of PBL, such as the number of publications per year, sample size, course of study, methodologies, and the nature of the publication medium used for disseminating findings. This article is thus an endeavor to contribute valuable insights to the discourse on pedagogical strategies in scientific education, focusing mainly on the comparative advantages of the PBL approach. We focus on the following research questions, based on a review of the literature from 2010-2023:

1) How does PBL impact students’ academic achievements in science subjects?

2) Does the year of publication (2010-2023) significantly influence the effect sizes reported in the PBL literature?

3) Does the study’s sample size significantly influence the effect sizes of publications within the PBL literature?

The article moves forward with the following hypotheses:

H1: PBL significantly enhances students’ academic achievements in science subjects compared to traditional instructional methods.

H2: Research on PBL from 2010 to 2023 demonstrates a significant variance in the reported effect sizes.

H3: The sample size of studies significantly influences the reported effect sizes.

METHODS

The present study utilized a meta-analysis technique to assess the efficacy of PBL for teaching science subjects. As a research methodology, meta-analysis comprehensively examines a body of literature by integrating and reinterpreting the outcomes of distinct but related studies in a specific domain (Crowther et al., 2010). This technique distinguishes itself from other literature review methodologies by relying on statistical methods and quantitative data (Balduzzi et al., 2019). Meta-analysis has become particularly prevalent in social psychology, offering valuable insights for informing social policies (Leuschner et al., 2013). There are several variants of meta-analysis; we employ the “Study Effect Meta-Analysis” approach.

DATA COLLECTION

The researcher established specific criteria to select relevant studies for meta-analysis. These required that the studies employ a pre-test/post-test control group design, assess PBL’s impact on academic performance, and report relevant statistical data, including mean scores, standard deviation, and sample size. Studies were accessed through CNK databases, education-related journals, and congress and symposium publications. Databases such as Science Direct, Web of Science, EBSCO, Google Scholar, ERIC, and ProQuest were searched using the keywords “PBL” and “student achievement”. Furthermore, we identified master’s and doctoral theses fitting the criteria. Specifically, our search focused on theses with English titles and keywords such as PBL, academic achievement, experimental group, control group, and mean scores. This process yielded a total of 70 studies.

Each study was individually reviewed to ensure it met the inclusion criteria, which are listed in detail in the following section. Each included study employed a pre-test/post-test design with an experimental and a control group, compared by t-test, and measured academic achievement with reported statistical data. Research articles that did not meet these criteria were excluded from the meta-analysis list, as outlined in Figure 2. Many studies were excluded for reasons such as not measuring academic performance, lacking standard deviation and arithmetic mean data, or not focusing on science as the discipline under investigation.

INCLUSION CRITERIA

1. Temporal Scope: Studies were conducted between 2010 and 2023, encompassing a global perspective.

2. Publication Language: Only research articles published in scientific journals, master’s theses, and doctoral theses written in English were included.

3. Experimental Design: Studies followed an experimental design, allowing for a rigorous examination of the impact of PBL.

4. Experimental and Control Groups: Studies focused on applying PBL in the experiment group while maintaining a control group that followed traditional instructional methods.

5. Academic Achievement Measures: Studies measured students’ academic achievement and provided insights into performance within science classrooms, encapsulating pertinent details like arithmetic means and the dispersion measure for experimental as well as comparison groups.

6. Sample Information: Studies included detailed information regarding sample sizes and characteristics of study groups, allowing for a more accurate analysis of the impact of PBL on student achievement.

By employing these inclusion criteria, this meta-analysis aims to ensure a comprehensive and rigorous examination of the effectiveness of PBL on student achievement within the specified timeframe.
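The six criteria above can be read as a simple filter over candidate studies. The following is a minimal sketch of that idea, not the screening procedure actually used in this study; the `StudyRecord` class, its field names, and the sample records are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass
class StudyRecord:
    """Hypothetical coding of one candidate study (all field names are assumptions)."""
    year: int
    language: str
    is_experimental: bool      # criterion 3: experimental design
    has_control_group: bool    # criterion 4: PBL group plus traditional control group
    reports_mean_and_sd: bool  # criterion 5: means and dispersion for both groups
    reports_sample_size: bool  # criterion 6: sample information


def meets_inclusion_criteria(study: StudyRecord) -> bool:
    """Return True only if the study satisfies every inclusion criterion."""
    return (
        2010 <= study.year <= 2023                        # criterion 1
        and study.language.strip().lower() == "english"   # criterion 2
        and study.is_experimental
        and study.has_control_group
        and study.reports_mean_and_sd
        and study.reports_sample_size
    )


# Made-up candidates, used only to exercise the filter.
candidates = [
    StudyRecord(2015, "English", True, True, True, True),
    StudyRecord(2008, "English", True, True, True, True),   # outside 2010-2023
    StudyRecord(2019, "English", True, True, False, True),  # no mean/SD reported
]
included = [s for s in candidates if meets_inclusion_criteria(s)]
print(len(included))  # 1
```

Treating the criteria as a conjunction means a study is dropped as soon as any single criterion fails, which mirrors the exclusion reasons described in the data collection section.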

SCREEN PROCEDURE

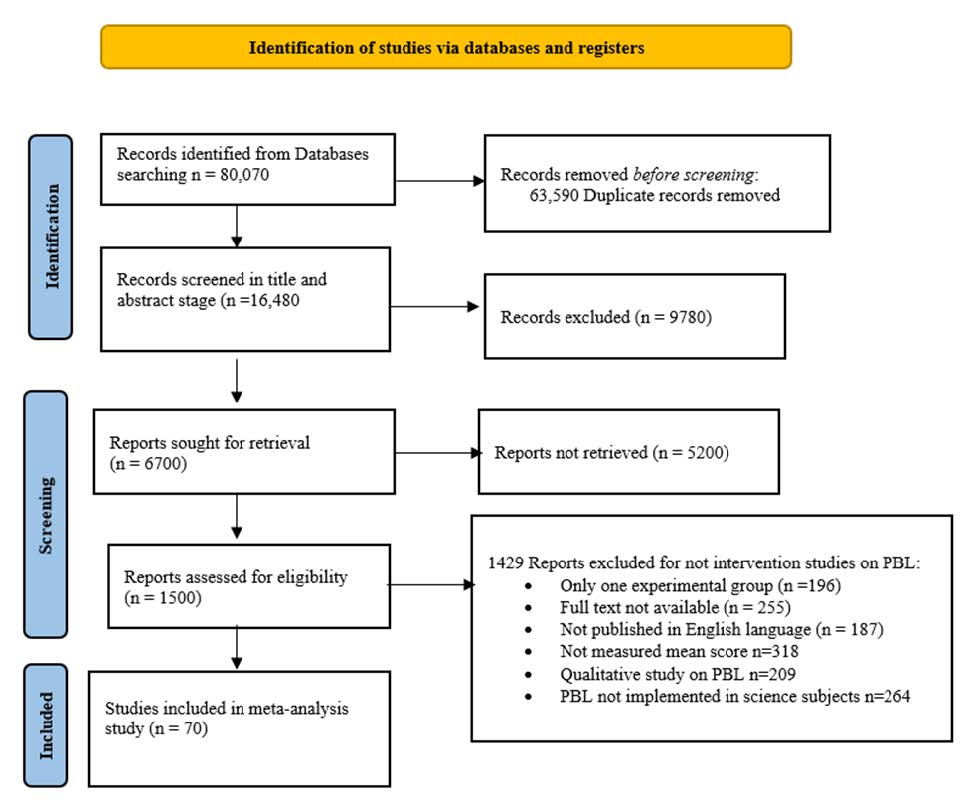

The PRISMA software package Covidence was used to screen the research articles, with the process of selection shown in Figure 2. A total of 80,070 studies were first identified before duplications were removed and criteria applied. A total of 63,590 duplicate records were removed. To arrive at the final number of 70 studies to be included, we followed these steps:

Initial Search: We searched databases such as Science Direct, Web of Science, and Google Scholar, using keywords such as “PBL” and “student achievement.” This initial search returned 80,070 studies, reflecting the breadth of interest in PBL.

Duplicate Removal: The first step was to remove duplicate records. With the aid of the Covidence software, 63,590 duplicate records were removed.

Eligibility Criteria: We sought studies from 2010 to 2023, focusing on those that employed a pre-test/post-test control group design and presented detailed statistical data. These criteria ensured we focused on recent, relevant, and methodologically sound studies.

Full-Text Review: After identifying 1,500 promising studies, we examined their full text, evaluating them against our criteria. Language barriers, lack of comprehensive data, or deviation from PBL in science education were reasons for exclusion.

Final Sample: This process yielded the final 70 studies matching our criteria.

Figure 2

PRISMA flowchart: inclusion of studies in the meta-analysis program.

CODIFICATION OF DATA

Ensuring the reliability of coding is a critical aspect of conducting a meta-analysis. Therefore, all studies must be evaluated by a minimum of two experts (Weisburd et al., 2022). The researchers developed a codification form to determine the suitability of including studies in the meta-analysis and enable comparisons across different meta-analyses. This form encompasses various general characteristics of the studies, such as study name, author, study type, publication year, scale used, study duration, study location, educational level of students, statistical data, and effect size.

Two other researchers independently conducted the codifications to ensure the reliability of the study. Discrepancies between the codes assigned by the two researchers were carefully examined and resolved through consensus. The inter-coder reliability was determined to be 93 percent, using the reliability formula proposed by Huberman & Miles (2002). According to Churchill & Peter (1984), reliability values of 70 percent or higher are considered sufficiently reliable. On this basis, the codifications used in this study can be regarded as reliable.
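The agreement formula referred to above is commonly stated as reliability = agreements / (agreements + disagreements). A minimal sketch of that calculation follows; the function name and the two code lists are hypothetical and are not the coding data of this study.

```python
def intercoder_agreement(codes_a, codes_b):
    """Percent-agreement reliability: agreements / (agreements + disagreements)."""
    if len(codes_a) != len(codes_b):
        raise ValueError("Both coders must code the same number of items.")
    if not codes_a:
        raise ValueError("At least one coded item is required.")
    agreements = sum(a == b for a, b in zip(codes_a, codes_b))
    disagreements = len(codes_a) - agreements
    return agreements / (agreements + disagreements)


# Made-up codes from two coders for 15 items; they differ on the last item only.
coder_1 = ["article"] * 10 + ["thesis"] * 5
coder_2 = ["article"] * 10 + ["thesis"] * 4 + ["article"]
print(f"{intercoder_agreement(coder_1, coder_2):.1%}")  # 93.3%
```

Percent agreement does not correct for chance agreement, so it is best read alongside the threshold the authors cite rather than as a standalone quality measure.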

DATA ANALYSIS

The present study uses treatment effect meta-analysis for data analysis. This approach, pioneered by Glass (1976), holds significant importance in educational practices, social sciences, and psychology research. Treatment effect meta-analysis considers the relationship between effects, the nature of the object studied, the quantity of the treatment, and the factors influencing the effect. Standardized effect sizes, represented by Cohen’s d, are employed within this meta-analysis and are obtained by dividing the difference between the experimental and control group means by the pooled standard deviation. This standardization places results from multiple studies on a common scale so that their effect sizes can be compared. Additionally, power analysis is conducted to assess the accuracy of the obtained effect sizes, using the NORMSDIST function in Excel (Borenstein, 2022; Ellis, 2010; Üstün & Eryılmaz, 2014).
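To make the effect size definition concrete, the sketch below computes Cohen’s d from group means, standard deviations, and sample sizes, together with the usual large-sample approximation of its variance. The function names and the input numbers are illustrative assumptions, not values drawn from the included studies.

```python
import math


def pooled_sd(sd_e, n_e, sd_c, n_c):
    """Pooled standard deviation of the experimental (e) and control (c) groups."""
    return math.sqrt(((n_e - 1) * sd_e**2 + (n_c - 1) * sd_c**2) / (n_e + n_c - 2))


def cohens_d(mean_e, sd_e, n_e, mean_c, sd_c, n_c):
    """Standardized mean difference: (mean_e - mean_c) / pooled SD."""
    return (mean_e - mean_c) / pooled_sd(sd_e, n_e, sd_c, n_c)


def d_variance(d, n_e, n_c):
    """Large-sample approximation of the sampling variance of d."""
    return (n_e + n_c) / (n_e * n_c) + d**2 / (2 * (n_e + n_c))


# Made-up post-test scores: PBL group 75 (SD 10, n 30), control group 70 (SD 10, n 30).
d = cohens_d(75, 10, 30, 70, 10, 30)
print(round(d, 3))                      # 0.5
print(round(d_variance(d, 30, 30), 5))  # 0.06875
```

The variance is what later determines each study’s weight, so studies with larger samples contribute more to the pooled estimate.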

In a meta-analysis, the scales used in various studies may differ, leading to inconsistent values. Therefore, when conducting meta-analytic studies, the standardized mean differences in effect size, based on the presence or absence of the PBL approach, are employed as a suitable statistical method (Cohen, 1988; Huffcutt, 2002; Lipsey & Wilson, 2001; Schmidt & Hunter, 2015). This meta-analysis study comprises diverse research content, wherein effect sizes were calculated individually from different tests on distinct samples. The weights assigned to each study are determined as relative weights.

Meta-analysis studies are selected through a combination of qualitative and quantitative scrutiny of the research topic, minimizing quality concerns by only including dissertations and articles. As per the suggestions from specialists, primary research should not be assessed or disqualified solely on the grounds of a singular quality scale score for the meta-analysis (Mikolajewicz & Komarova, 2019; Üstün & Eryılmaz, 2014). Following this, it becomes imperative to amalgamate the statistical findings. Before determining the impact measures, the choice of statistical model depends on the Q statistics, as outlined by Hedges & Vevea (1998). This is done to gauge the uniformity of impact sizes and population samples in a meta-analysis. The two models under consideration are the fixed and random-effects models.

The premise of the fixed-effect model is that a single true effect size underlies all studies in the analysis, so that any discrepancies in effect sizes across studies are attributed to sampling error (Hedges & Vevea, 1998). Conversely, the random-effects model assumes that the true effect size may vary from study to study and that the summary effect estimates the mean of this distribution of effects. Because each study’s outcomes may be shaped by different influential factors, a random-effects model is fitting when this between-study variance is significant.
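The difference between the two models can be illustrated with inverse-variance pooling. The sketch below assumes the DerSimonian-Laird moment estimator for the between-study variance, which is one common choice; the paper does not state which estimator its software applied, and the three effect sizes and variances are made up.

```python
import math


def fixed_effect(effects, variances):
    """Inverse-variance weighted mean and its standard error."""
    weights = [1 / v for v in variances]
    pooled = sum(w * d for w, d in zip(weights, effects)) / sum(weights)
    return pooled, math.sqrt(1 / sum(weights))


def q_statistic(effects, variances):
    """Cochran's Q: weighted squared deviations from the fixed-effect mean."""
    pooled, _ = fixed_effect(effects, variances)
    return sum((d - pooled) ** 2 / v for d, v in zip(effects, variances))


def dl_tau_squared(effects, variances):
    """DerSimonian-Laird estimate of between-study variance (assumed estimator)."""
    weights = [1 / v for v in variances]
    c = sum(weights) - sum(w**2 for w in weights) / sum(weights)
    df = len(effects) - 1
    return max(0.0, (q_statistic(effects, variances) - df) / c)


def random_effects(effects, variances):
    """Re-pool after adding tau^2 to every study's within-study variance."""
    tau2 = dl_tau_squared(effects, variances)
    return fixed_effect(effects, [v + tau2 for v in variances])


# Made-up effect sizes with equal within-study variances.
effects, variances = [0.2, 0.5, 0.9], [0.04, 0.04, 0.04]
fe_mean, fe_se = fixed_effect(effects, variances)
re_mean, re_se = random_effects(effects, variances)
print(round(fe_mean, 3), round(fe_se, 3))  # 0.533 0.115
print(round(re_mean, 3), round(re_se, 3))  # 0.533 0.203
```

With equal within-study variances the two pooled means coincide, but the random-effects standard error is larger because between-study variance is added to each study’s uncertainty.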

Additional statistical measures, such as the I² statistic, complement the Q statistic in assessing the heterogeneity of the studies’ results, providing a clearer indication of heterogeneity (Popay et al., 2006). I² values of about 25, 50, and 75 percent are interpreted as low, intermediate, and high heterogeneity, respectively (Cooper et al., 2009, p. 263). The Orwin method, a tool that assesses the number of studies necessary to modify the importance of the outcomes relating to the effect sizes encompassed in the meta-analysis, was also employed (Christophorou et al., 2015). This method computes the number of additional studies with a mean effect size of zero that would be needed to reduce the overall effect to a trivial level, thereby indicating the practical significance of the result.
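Both quantities described above reduce to short formulas. The sketch below assumes the standard definitions I² = (Q - df) / Q and Orwin’s fail-safe N = k(d̄ - d_c) / (d_c - d_fs), where d_c is a criterion ("trivial") effect chosen by the analyst and d_fs is the mean effect of the hypothetical missing studies. The Q and df inputs are the values this article reports for the fixed-effect model; the Orwin inputs are hypothetical.

```python
def i_squared(q, df):
    """Share of total variation attributable to heterogeneity, floored at zero."""
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q)


def orwin_failsafe_n(k, mean_effect, criterion_effect, missing_effect=0.0):
    """Number of missing studies averaging `missing_effect` needed to pull the
    mean effect of k studies down to `criterion_effect`."""
    if criterion_effect <= missing_effect:
        raise ValueError("criterion_effect must exceed missing_effect.")
    return k * (mean_effect - criterion_effect) / (criterion_effect - missing_effect)


# Q and df as reported in this article for the fixed-effect model.
print(round(i_squared(180.70, 47), 2))  # 0.74

# Hypothetical inputs: 10 studies, mean d = 0.5, trivial threshold d = 0.1.
print(round(orwin_failsafe_n(10, 0.5, 0.1)))  # 40
```

The criterion effect is a judgment call, so any fail-safe N should be reported together with the threshold that produced it.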

This detailed meta-analysis study compares the impacts of the PBL methodology and traditional instructional approaches. Within this context, the PBL method and customary educational practices are treated as independent variables, while students’ performance in science courses is the dependent variable. The analog to ANOVA was used to examine moderator variables such as the number of publications and sample size. A variety of software tools were leveraged for data analysis, including Comprehensive Meta-Analysis 2.0 (CMA), MetaWin, and Excel. CMA was key in determining the overall effect size, executing sub-group analysis, evaluating publication bias, and generating the forest plot and funnel plot graphs. Meanwhile, MetaWin supported the development of a normal quantile plot, and Excel played a pivotal role in power analysis.
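Excel’s NORMSDIST returns the standard normal cumulative distribution, so the power calculation can be mirrored with Python’s `statistics.NormalDist`. The sketch below assumes the usual two-sided z-test power formula for a summary effect; whether the authors used exactly this formula is an assumption, and the inputs are made up.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # cdf() plays the role of Excel's NORMSDIST


def z_test_power(effect, se, alpha=0.05):
    """Power of a two-sided z test for a pooled effect with standard error `se`."""
    critical = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    lam = abs(effect) / se  # expected value of the Z statistic
    return 1 - _STD_NORMAL.cdf(critical - lam) + _STD_NORMAL.cdf(-critical - lam)


# Hypothetical pooled effect of 0.5 with a standard error of 0.2.
print(round(z_test_power(0.5, 0.2), 3))  # 0.705
```

When the true effect is zero the function returns alpha itself, which is a quick sanity check on the formula.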

RESULTS

GENERAL EFFECT SIZE RESULTS

The meta-analysis findings presented here compare and analyze the influence of the PBL approach with conventional instructional methods, focusing on their effect on students’ scholastic achievements in science disciplines. The first stage necessitates the selection of a suitable meta-analysis model by the researcher for determining the impact magnitude of the various studies. The researcher initially validates the uniformity of the studies through the application of a fixed-effect model. The findings concerning the studies’ consistency and the overall impact magnitude derived from the fixed-effect model are revealed in Table 1.

Table 1

Fixed-Effect Model (effect size). Showing Average Effect Size Value (ES), Degrees of Freedom (df), Homogeneity Value (Q), Standard Error (SE), and Confidence Interval (CI).

| ES | df | Q | Chi-Square Table Value | SE | I² | 95% CI for ES: Minimum Value | 95% CI for ES: Maximum Value |
|---|---|---|---|---|---|---|---|
| 0.400 | 47 | 180.70 | | 0.0489 | 0.74 | 0.304 | 0.496 |

The homogeneity value of research studies using a fixed-effect model was found to be Q = 180.70. This far exceeds the chi-square critical value of 64.001 for 47 degrees of freedom at the 0.05 significance level (for df = 47, χ² [0.95] = 64.001). In addition, the I² statistic is 0.74, or 74 percent, indicating a high degree of heterogeneity. This result signifies that the studies’ effect sizes diverge under the fixed-effect model, implying the absence of a single true effect size. Given this heterogeneity, a random-effects model may help mitigate potential inaccuracies. This model accounts for variation across studies and may therefore provide a more accurate representation of the overall ES in the presence of heterogeneity. Results regarding the homogeneity of the studies and general effect sizes under the random-effects model are given in Table 2.
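The confidence limits in Table 1 follow from the effect size and its standard error under the normal approximation, ES ± z × SE. The short sketch below reproduces them from the reported ES and SE; the function name is hypothetical, and small differences from software output due to rounding are possible.

```python
from statistics import NormalDist


def z_and_confidence_interval(effect, se, level=0.95):
    """Z statistic and normal-approximation confidence interval for an effect size."""
    critical = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95 percent
    return effect / se, (effect - critical * se, effect + critical * se)


# ES and SE as reported in Table 1 for the fixed-effect model.
z, (lower, upper) = z_and_confidence_interval(0.400, 0.0489)
print(round(lower, 3), round(upper, 3))  # 0.304 0.496
```

The same function can be applied to any pooled estimate and standard error to check that a reported interval is consistent with them.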

Table 2

The results of studies’ ES based on random effect model.

| ES | n | SE | Z | P | 95% CI for ES: Minimum Value | 95% CI for ES: Maximum Value |
|---|---|---|---|---|---|---|
| 0.652 | 70 | 0.3568 | 1.829 | 0.00 | -0.47 | 1.352 |

In examining the effect size of studies under a random-effects model, we found the ES to be 0.652. Effect sizes were categorized using the benchmarks given by Cohen et al. (2017), where d = 0.2 is considered a small effect, d = 0.5 a medium effect, and d = 0.8 a large effect. By these benchmarks, 0.652 lies between a medium and a large effect, indicating a considerable effect of PBL across the studies examined. The number of studies included in this analysis, represented by ‘n’, is 70, which contributes to the robustness of the meta-analysis findings. The SE of 0.3568 suggests a relatively large degree of statistical uncertainty around the effect size estimate. The Z statistic is 1.829 and, together with the reported p-value of 0.00, is taken to indicate that the observed effect is statistically significant, confirming that the PBL intervention has a measurable impact. The 95 percent CI for the ES stretches from -0.47 to 1.352; that is, we can be 95 percent confident that the true ES in the population falls within this range. This interval crosses zero, which reflects a high degree of variability in effects across studies, as is expected under a random-effects model with heterogeneous studies. The negative lower limit of -0.47 suggests that, under certain conditions or in specific contexts, PBL could have counterproductive outcomes or even detract from the desired educational objectives. Conversely, the positive upper limit of 1.352 points toward studies where PBL has had a markedly favorable impact. Thus, while most studies indicate a positive effect, others may show negative, neutral, or non-significant results, which emphasizes the nuanced outcomes of PBL across different studies and contexts.

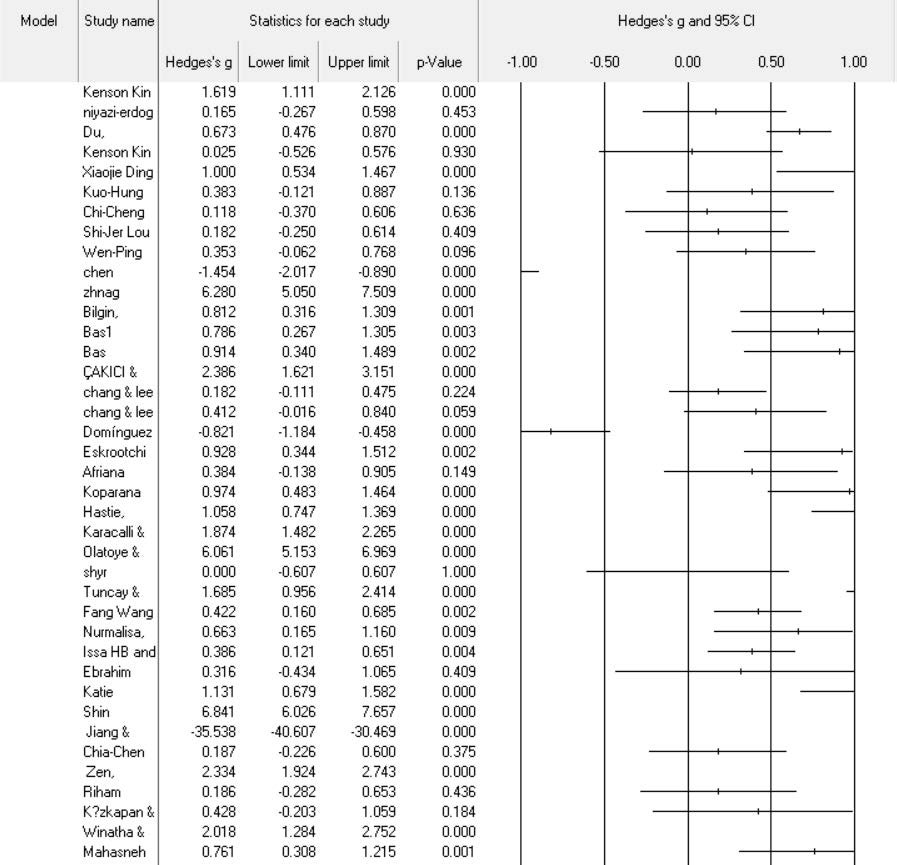

Given these findings, the results indicate considerable heterogeneity in the effects of PBL across the 70 studies included in the meta-analysis. This suggests that the specific context of each study significantly influences the effectiveness of PBL, underscoring the importance of considering the unique characteristics and qualities of individual studies when interpreting these results. A forest plot illustrating the dispersion of the primary studies’ ES values under the random-effects model is presented in Figure 3.

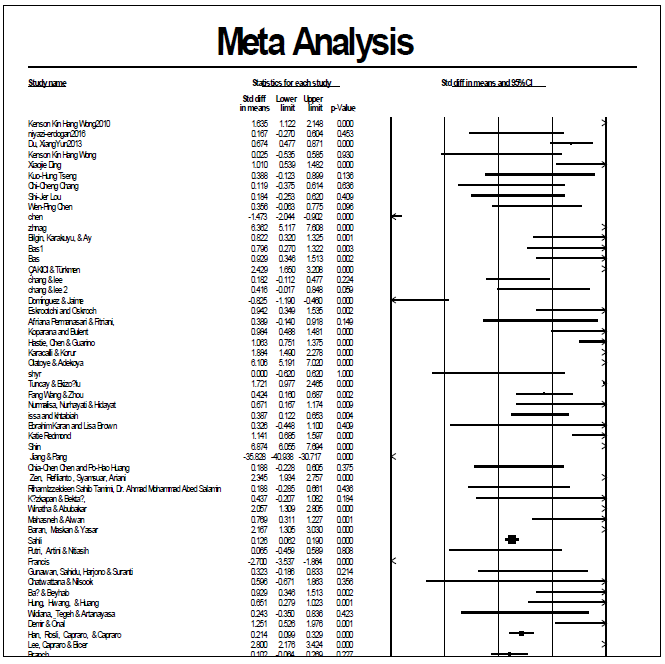

Figure 3

Forest plot showing the distribution of ES values across studies.

Each square in the plot marks a study’s ES, and the horizontal line through it spans the lower and upper limits of the 95 percent CI for that ES. The size of the square reflects the weight the study contributes to the overall ES. The diamond at the bottom represents the overall ES across all studies.
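How study weights translate into an overall ES can be illustrated with a short sketch. It assumes simple inverse-variance (fixed-effect) weights, whereas a random-effects model adds a between-study variance term to each study’s variance before weighting. The effect sizes and standard errors below are hypothetical and are not taken from the included studies.

```python
def inverse_variance_weights(standard_errors):
    """Normalized weights proportional to 1 / SE^2 (fixed-effect assumption)."""
    raw = [1.0 / se ** 2 for se in standard_errors]
    total = sum(raw)
    return [w / total for w in raw]


def pooled_effect(effects, standard_errors):
    """Weighted mean of the study effect sizes."""
    weights = inverse_variance_weights(standard_errors)
    return sum(w * d for w, d in zip(weights, effects))


# Hypothetical studies: more precise studies (smaller SE) receive larger weights,
# which a forest plot shows as larger squares.
effects = [0.30, 0.80, 1.20]
standard_errors = [0.10, 0.20, 0.40]

weights = inverse_variance_weights(standard_errors)
pooled = pooled_effect(effects, standard_errors)
print([round(w, 3) for w in weights], round(pooled, 3))
```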

A review of the individual effect sizes shows a minimum of -9.35 and a maximum of 2.39. Out of the 49 studies, 38 present a positive effect size, favoring the experimental group taught with PBL, while 11 present a negative effect size, favoring the control group taught with the traditional education method. The overall effect size is 0.40, with an SE of 0.05 and a 95 percent CI ranging from 0.30 to 0.50, indicating that the PBL approach has a positive effect on average.

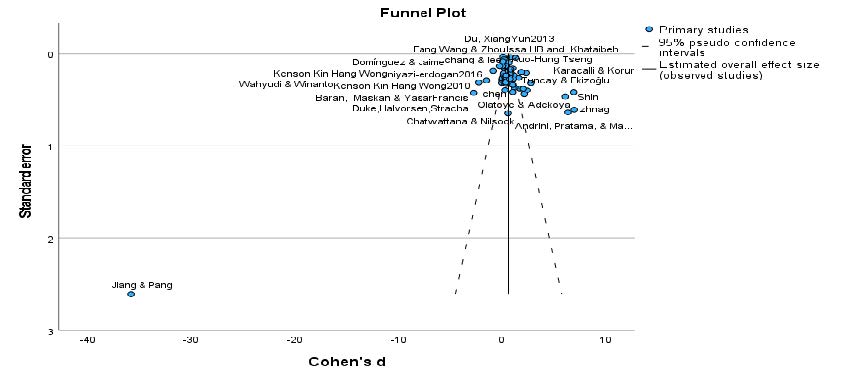

The effect sizes of the included studies are approximately normally distributed: the points cluster around the x = y line and stay within the confidence bounds marked by the cut-off lines. Figure 4 plots this distribution. A funnel plot, by contrast, plots the precision of individual studies (through their standard errors) against their effect sizes. A distribution that is symmetrical about the mean effect size suggests no publication bias, whereas marked asymmetry might indicate potential bias.

Figure 4

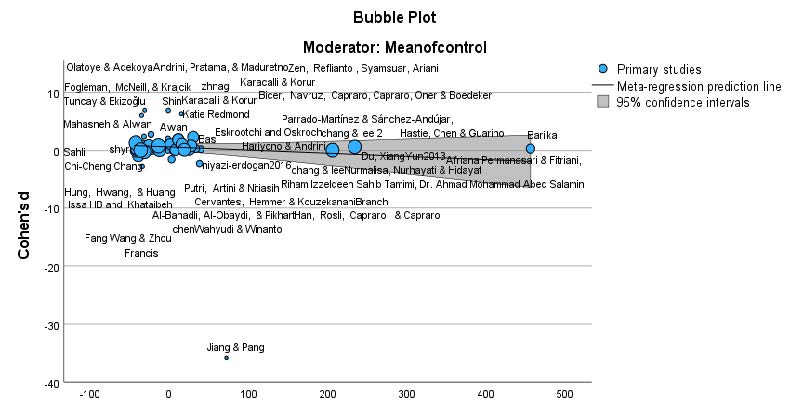

Effect size bubble plot.

In the bubble plot of the studies’ effect sizes, the points lie close to the normal distribution line and do not cross the predefined boundaries, so the effect sizes of the included studies can be treated as normally distributed. A funnel plot is commonly used in meta-analysis to examine visually whether publication bias or small-study effects are present, and Figure 5 provides one for this purpose. The cluster at the top, with a fairly small range of effect sizes, suggests low heterogeneity among larger studies. However, one study with a markedly different result (at the bottom) and a few larger studies with more extreme results may suggest potential publication bias or small-study effects. In other words, smaller studies (and potentially some larger ones) with more extreme results might be published more often, which would lead the meta-analysis to overestimate the overall effect size.

Figure 5

Funnel plot representing bias in the studies.

When publication bias is present, effect sizes are distributed asymmetrically within the funnel plot; when it is absent, they are typically distributed symmetrically. Under the trim and fill method of Duval & Tweedie (2000), the plot becomes symmetrical once four studies are added to its left side. Because so few imputed studies are needed, this is taken as evidence of low publication bias. The adjusted mean effect size is 0.819.

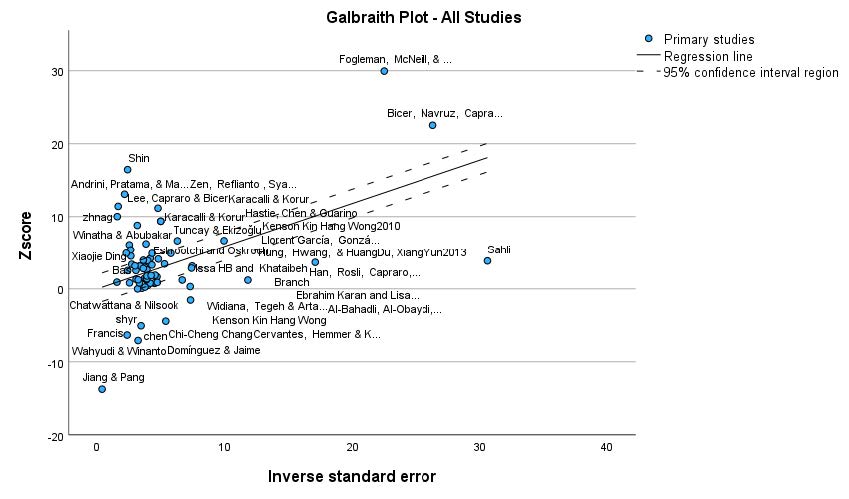

Figure 6

Galbraith plot.

RESULTS BY YEAR OF PUBLICATION (2010-2023)

Table 3 reports whether effect sizes for academic achievement differ according to the year of publication.

Table 3

Effect size differences according to year of publication, 2010-2023.

| Year of publication | Inter-group homogeneity value (QB) | p | n | ES | ES 95% CI Min. | ES 95% CI Max. | SE |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 2010-2015 | 1977.16 | 0.0000 | 30 | 1.036 | 0.6182 | 1.0022 | 0.0980 |
| 2016-2020 | | | 22 | 1.432 | 0.485 | 0.556 | 0.018 |
| 2021-2023 | | | 17 | 0.837 | 0.602 | 1.023 | 0.107 |

Table 3 groups the studies included in the meta-analysis by publication period. The QB value is 1977.16, and the corresponding p-value is 0.0000. This is below the conventional significance level of 0.05, which means that effect sizes differ significantly across the publication periods within 2010-2023. The average effect size lies between 0.6182 and 1.0022.
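The between-group homogeneity statistic can be sketched as follows. The sketch assumes each subgroup is summarized by a mean effect size and its SE and that QB is compared against a chi-square distribution with (number of groups - 1) degrees of freedom. The subgroup values are hypothetical rather than those of Table 3, and the function name is illustrative.

```python
# Chi-square critical values at the 0.05 level for small degrees of freedom.
CHI2_CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815}


def between_group_q(group_effects, group_ses):
    """Return (QB, df): weighted squared deviations of subgroup means
    from the inverse-variance weighted grand mean."""
    weights = [1.0 / se ** 2 for se in group_ses]
    grand_mean = sum(w * d for w, d in zip(weights, group_effects)) / sum(weights)
    qb = sum(w * (d - grand_mean) ** 2 for w, d in zip(weights, group_effects))
    return qb, len(group_effects) - 1


# Hypothetical subgroup summaries for three publication periods.
qb, df = between_group_q([0.50, 0.70, 0.90], [0.10, 0.10, 0.10])
significant = qb > CHI2_CRITICAL_05[df]
print(f"QB = {qb:.2f}, df = {df}, significant at 0.05: {significant}")
```

A QB above the critical value indicates that the subgroup means differ by more than sampling error alone would explain.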

CORRELATION OF PBL EFFICACY WITH STUDY SAMPLE SIZE

The studies included in the meta-analysis varied substantially in sample size, so sample size was treated as a key characteristic of each study. Studies were divided into three categories by sample size: small (n ≤ 50), medium (51 ≤ n ≤ 100), and large (n > 100). Most studies used a medium sample size (n = 42). The lowest effect size was found in studies with small samples (d = 0.484), while studies with medium and large samples showed effect sizes of d = 1.010 and d = 6.280, respectively. Statistical analyses did not suggest any significant differences between the mean effect sizes of the sample-size groups (QB = 1815.81). Homogeneity within groups was 96.444 for small, 360.480 for medium, and 28.7609 for large samples. These findings suggest that the impact of PBL remains relatively stable irrespective of sample size, indicating its effectiveness and applicability across educational settings of different scales (see Table 4 for details).

Table 4

Impact of sample size on effect size differentiation.

| Variables | Inter-group homogeneity value (QB) | Overall mean effect size d | n | d 95% CI Min. | d 95% CI Max. | Homogeneity within groups |
| --- | --- | --- | --- | --- | --- | --- |
| Sample size | 1815.81 | | | | | |
| Small | | 2.635 | 20 | 0.484 | 0.554 | 96.4440 |
| Medium | | 1.010 | 42 | 0.594 | 1.012 | 360.480 |
| Large | | 6.280 | 9 | 0.0574 | 0.0382 | 28.7609 |

Figure 7

Effect size of each study with both models.

DISCUSSION

The present meta-analysis synthesized the results of 70 studies comparing the effects of PBL and traditional education methods on academic achievement in science classes. By employing treatment-effect meta-analysis and standardizing effect sizes as Cohen’s d, this study aimed to capture the nuanced relationship between the teaching methods and their impact on student outcomes. Cohen (1980) observed a significant general impact. In essence, the performance of students who undergo PBL education surpasses that of their peers educated through traditional methods by 86.6%. A wealth of research on PBL in science education corroborates this, including studies by Bilgin et al. (2015); Çakici & Türkmen (2013); Chen & Yang (2019); Crespí et al. (2022); Darmuki et al. (2023); Maros et al. (2021); Schneider et al. (2022); and Kartika et al. (2022). The findings of these studies align with this meta-analysis, suggesting that PBL enhances students’ academic performance.
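For readers less familiar with the metric, Cohen’s d for a single primary study can be sketched as below. The sketch assumes two independent groups and a pooled standard deviation, and uses a common large-sample approximation for the SE of d. The group statistics are hypothetical and the function names are illustrative.

```python
import math


def cohens_d(mean_exp, sd_exp, n_exp, mean_ctl, sd_ctl, n_ctl):
    """Standardized mean difference using the pooled standard deviation."""
    pooled_var = ((n_exp - 1) * sd_exp ** 2 + (n_ctl - 1) * sd_ctl ** 2) / (
        n_exp + n_ctl - 2
    )
    return (mean_exp - mean_ctl) / math.sqrt(pooled_var)


def d_standard_error(d, n_exp, n_ctl):
    """Large-sample approximation of the standard error of Cohen's d."""
    variance = (n_exp + n_ctl) / (n_exp * n_ctl) + d ** 2 / (2 * (n_exp + n_ctl))
    return math.sqrt(variance)


# Hypothetical post-test scores: PBL group versus traditional group.
d = cohens_d(mean_exp=78.0, sd_exp=10.0, n_exp=30,
             mean_ctl=72.0, sd_ctl=10.0, n_ctl=30)
se = d_standard_error(d, n_exp=30, n_ctl=30)
print(f"d = {d:.3f}, SE = {se:.3f}")
```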

In the fixed-effect model, a high level of heterogeneity was observed (I² = 74 percent), suggesting substantial variation in effect sizes across the studies. This led to the use of a random-effects model, which accounts for this variability and is typically used when heterogeneity is high. Based on the random-effects model, the overall effect size was 0.652, signifying a moderate effect in favor of the PBL approach. The 95% CI, however, crossed zero, indicating that while the majority of studies reflected a positive impact of PBL on academic achievement, some studies reported no effect or a negative effect. This wide range of outcomes underscores the complexity of implementing PBL in diverse contexts and populations. Factors such as curriculum design, teacher training, student demographics, and class size could potentially influence the efficacy of PBL.
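The step from a fixed-effect to a random-effects estimate can be illustrated with a minimal sketch. It assumes the DerSimonian-Laird estimator for the between-study variance, which is one common choice and is not necessarily the estimator implemented in the software used here. The input values are hypothetical.

```python
import math


def random_effects_summary(effects, standard_errors):
    """Cochran's Q, I^2, DerSimonian-Laird tau^2, and the random-effects estimate."""
    w = [1.0 / se ** 2 for se in standard_errors]
    sum_w = sum(w)
    fixed = sum(wi * d for wi, d in zip(w, effects)) / sum_w

    # Cochran's Q: weighted squared deviations from the fixed-effect estimate.
    q = sum(wi * (d - fixed) ** 2 for wi, d in zip(w, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0

    # Between-study variance, truncated at zero.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # Random-effects weights add tau^2 to each study's variance.
    w_re = [1.0 / (se ** 2 + tau2) for se in standard_errors]
    pooled = sum(wi * d for wi, d in zip(w_re, effects)) / sum(w_re)
    se_pooled = math.sqrt(1.0 / sum(w_re))
    return {"q": q, "i2": i2, "tau2": tau2, "pooled": pooled, "se": se_pooled}


# Hypothetical study-level effect sizes and standard errors.
result = random_effects_summary([0.2, 0.5, 0.9, 1.3], [0.15, 0.20, 0.25, 0.30])
print({name: round(value, 3) for name, value in result.items()})
```

When tau² is greater than zero, the random-effects weights are more even across studies, which is why the pooled SE and CI widen as heterogeneity grows.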

The funnel plot, alongside Duval & Tweedie’s (2000) trim and fill method, indicated low publication bias, which increases confidence in the robustness of the results. Although slight asymmetry was noted in the funnel plot, complete symmetry is rarely achieved in practical research scenarios. The effect sizes also varied significantly by year of publication: studies published between 2010 and 2023 showed a varying range of effect sizes (see Table 3). This could be due to improvements in the implementation of PBL over time or the evolution of traditional educational practices. The current study, however, did not investigate the causes of this variation, which leaves scope for future research.

Further, when the sample size was taken into consideration, the impact of PBL was found to be fairly consistent, signifying its effectiveness across varying scales of educational settings. Though effect sizes did not significantly differ based on sample sizes, it is interesting to note that the smallest effect size was observed in the smallest sample size group. This might be attributed to the interactive, group-based nature of PBL, which perhaps resonates more effectively in larger classroom environments.

Meta-analyses also play a crucial role in integrating the results of independent studies. The key takeaways from such analyses include that PBL improves students’ academic outcomes. The publication status does not impact the eligibility of studies for the research criteria. There are no significant variances in the average influence of articles published from 2010 to 2023, with all types of publications demonstrating a substantial effect. Regardless of the sample size or whether it is implemented independently or in conjunction with other methods, PBL consistently proves its efficacy. Moreover, PBL consistently produces favorable outcomes in several different countries.

CONCLUSION

This meta-analysis examined the effectiveness of PBL versus traditional education methods in science classrooms. The analysis of 70 studies was both a quest for numbers and a deep dive into understanding how teaching methodologies shape student learning. Compared to traditional methods, PBL often enhances student performance in science education. PBL exhibited a significant edge based on Cohen’s d. This finding is echoed in several studies worldwide. However, it is not just a one-note narrative. This analysis reveals a high degree of heterogeneity that characterizes educational settings globally. The diversity of PBL implementation shows that it is not a one-size-fits-all solution. Moderate effect sizes and variability in results across studies highlight the complexity of education systems. Factors like curriculum design, teacher expertise, and student demographics emerge as critical players in this educational orchestra. Varying effect sizes over the years suggest an evolving educational landscape where both PBL and traditional methods constantly refine their approaches. The low publication bias in our study is particularly encouraging. Although perfect funnel-plot symmetry is rarely achieved in research, this lends credibility to our findings. Our analysis also suggests that PBL’s effectiveness does not waver significantly with the classroom size. It seems to hold its ground in intimate settings or larger educational arenas.

In conclusion, the findings suggest that PBL can positively impact academic achievement in science classes compared to traditional educational methods. However, this impact varies significantly across different studies, indicating the need for context-specific application and further research. Future studies could focus on identifying the factors contributing to the success of PBL in specific contexts and how these could be replicated in other settings. The potential impact of PBL on non-academic outcomes, such as student engagement and satisfaction, could also be explored.

LIMITATIONS

The present study has two limitations. First, heterogeneity among the studies included in the analysis was high, implying considerable variation in the methodologies, contexts, and populations of these studies. While the random-effects model used in this analysis accounts for this, conclusions drawn from this study should be applied with caution. Second, while efforts were made to reduce the risk of bias, it is impossible to eliminate this risk in a meta-analysis.

RECOMMENDATIONS

1. Given that the most significant effect value comes from the studies conducted during the years 2010-2015, which shows a considerable variation in the effect sizes of the studies conducted between 2010-2023, it would be sensible to deploy PBL more frequently at all levels.

2. Most PBL research appears to use traditional, teacher-centered teaching methods in control groups, without comparing PBL with other student-centered strategies. Future research should explore these comparisons.

3. Examining the general characteristics of the studies included in the meta-analysis was difficult because detailed data about those who implemented the interventions were lacking. Critical details about the implementer’s educational background, professional experience, and whether they were also the researcher were often missing. When the implementer was different from the researcher, there was usually no mention of any preparatory training given. Similarly, implementation duration often went unreported. Because these are crucial factors that influence a study’s outcome, all future research should provide this information.

4. The overall effect size calculated in our study (d = 0.652) can serve as a benchmark for future investigations of PBL. Subsequent PBL research can gauge its findings against this effect size.

5. The effect size from the present study indicates that PBL is a highly effective method in science education, comparable to laboratory-supported teaching (d = 0.652). Therefore, evaluating the effectiveness of other student-centered teaching methods like Argumentation-Based Teaching, Collaborative Teaching, Computer-Aided Teaching, and Brain-Based Teaching in science education and comparing the results with this study would improve the quality of science education.

6. There appears to be a gap in national research, with no meta-analysis study evaluating the efficacy of PBL across various disciplines (such as math, English, art, music, etc.) identified. As such, conducting meta-analyses in diverse areas could potentially pinpoint the subjects where PBL demonstrates the most significant impact.

7. All research studies in the present PBL meta-analysis used different assessment tests to measure academic performance. However, in addition to the final results, the process in PBL should also be assessed and included in student evaluation. Hence, researchers should consider utilizing progress files and rubrics to evaluate the process in their PBL studies.

8. To sum up, it becomes evident that PBL manifests superior efficacy in contrast to conventional pedagogical approaches when applied to science instruction. Hence, PBL should be a standard tool in primary, secondary, and tertiary science education. This finding should influence curriculum development and lead to more PBL activities in textbooks.

DECLARATIONS

Data is available to be accessed with the author’s permission. The author(s) declared no potential conflicts of interest concerning this article’s research, authorship, and publication.

REFERENCES

Almulla, M. A. (2020). The Effectiveness of the Project-Based Learning (PBL) Approach as a Way to Engage Students in Learning. SAGE Open, 10(3), 2158244020938702. https://doi.org/10.1177/2158244020938702

An, Q. H. (2023). 8th Graders’ Attitude Toward the Implementation of Project-Based Learning Method in Teaching English Reading Skills: A Case Study at Public Secondary School in Ho Chi Minh City, Vietnam. International Journal of English Literature and Social Sciences, 8(3). https://dx.doi.org/10.22161/ijels.83.76

Ananda, F., Ramadina, A. R., Melianti, E. O., Jalinus, N., & Abdullah, R. (2023). Analysis of the Influence of Project-Based Learning Models on the Learning Outcomes of Vocational High School Students Using Correlation Meta-Analysis. Journal Pendidikan Dan Konseling (JPDK), 5(1), Article 1. https://doi.org/10.31004/jpdk.v5i1.11369

Balduzzi, S., Rücker, G., & Schwarzer, G. (2019). How to perform a meta-analysis with R: A practical tutorial. Evidence-Based Mental Health, 22(4), 153–160. https://doi.org/10.1136/ebmental-2019-300117

Balemen, N., & Keskin, M. Ö. (2018). The Effectiveness of Project-Based Learning on Science Education: A Meta-Analysis Search. International Online Journal of Education and Teaching, 5(4), 849–865.

Bilgin, I., Karakuyu, Y., & Ay, Y. (2015). The Effects of Project Based Learning on Undergraduate Students’ Achievement and Self-Efficacy Beliefs Towards Science Teaching. Eurasia Journal of Mathematics, Science and Technology Education, 11(3), 469–477. https://doi.org/10.12973/eurasia.2014.1015a

Borenstein, M. (2022). Comprehensive Meta-Analysis Software. In M. Egger, J. P. T. Higgins, & G. Davey Smith (Eds.), Systematic Reviews in Health Research: Meta‐Analysis in Context (pp. 535–548). John Wiley & Sons, Ltd. https://doi.org/10.1002/9781119099369.ch27

Boss, S., & Krauss, J. (2007). Reinventing Project-Based Learning: Your Field Guide to Real-World Projects in the Digital Age. International Society for Technology in Education.

Çakici, Y., & Türkmen, N. (2013). An investigation of the effect of project-based learning approach on children’s achievement and attitude in science. TOJSAT, 3(2), 9-17.

Carriger, M. S. (2015). Problem-based learning and management development–Empirical and theoretical considerations. International Journal of Management Education, 13(3), 249–259. https://doi.org/10.1016/j.ijme.2015.07.003

Chen, C., & Yang, Y. (2019). Revisiting the effects of project-based learning on students’ academic achievement: A meta-analysis investigating moderators. Educational Research Review, 26, 71–81. https://doi.org/10.1016/j.edurev.2018.11.001

Chen, J., Kolmos, A., & Du, X. (2021). Forms of implementation and challenges of PBL in engineering education: A review of literature. European Journal of Engineering Education, 46(1), 90–115. https://doi.org/10.1080/03043797.2020.1718615

Christophorou, D., Funakoshi, N., Duny, Y., Valats, J., Bismuth, M., Pineton De Chambrun, G., Daures, J., & Blanc, P. (2015). Systematic review with meta-analysis: Infliximab and immunosuppressant therapy vs. infliximab alone for active ulcerative colitis. Alimentary Pharmacology & Therapeutics, 41(7), 603–612. https://doi.org/10.1111/apt.13102

Churchill, G. A., & Peter, J. P. (1984). Research Design Effects on the Reliability of Rating Scales: A Meta-Analysis. Journal of Marketing Research, 21, 360-375. https://doi.org/10.1177/002224378402100402

Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences (2nd ed.). Lawrence Erlbaum Associates.

Cohen, P. A. (1980). Effectiveness of student-rating feedback for improving college instruction: A meta-analysis of findings. Research in Higher Education, 13, 321-341. https://doi.org/10.1007/BF00976252

Cohen, L., Manion, L., & Morrison, K. (2017). Statistical significance, effect size and statistical power. In Research Methods in Education (pp. 739-752). Routledge.

Condliffe, B. (2017). Project-Based Learning: A Literature Review [Working paper]. MDRC. https://eric.ed.gov/?id=ED578933

Cooper, H., Hedges, L. V., & Valentine, J. C. (Eds.). (2009). The handbook of research synthesis and meta-analysis (2nd ed.). Russell Sage Foundation.

Crespí, P., García-Ramos, J. M., & Queiruga-Dios, M. (2022). Project-Based Learning (PBL) and Its Impact on the Development of Interpersonal Competences in Higher Education. Journal of New Approaches in Educational Research, 11(2), 259-276. https://doi.org/10.7821/naer.2022.7.993

Crowther, M., Lim, W., & Crowther, M. A. (2010). Systematic review and meta-analysis methodology. Blood, 116(17), 3140-3146. https://doi.org/10.1182/blood-2010-05-280883

Darmuki, A., Nugrahani, F., Fathurohman, I., Kanzunnudin, M., & Hidayati, N. (2023). The Impact of Inquiry Collaboration Project Based Learning Model of Indonesian Language Course Achievement. International Journal of Instruction, 16, 247–266. https://doi.org/10.29333/iji.2023.16215a

Devi, M. K., & Thendral, M. S. (2023). Using Kolb’s Experiential Learning Theory to Improve Student Learning in Theory Course. J. Eng. Educ. Transf, 37, 70-81.

Dilekli, Y. (2020). Project-Based Learning. In Ş. Orakcı (Ed.), Paradigm Shifts in 21st Century Teaching and Learning (pp. 53–68). IGI Global. https://doi.org/10.4018/978-1-7998-3146-4.ch004

Djumanova, B. O., & Makhmudov, K. S. (2020). Roles Of Teachers in Education of the 21st Century. Science and Education, 1(3), Article 3. https://openscience.uz/index.php/sciedu/article/view/284

Duval, S., & Tweedie, R. (2000). Trim and fill: A simple funnel-plot-based method of testing and adjusting for publication bias in meta-analysis. Biometrics, 56(2), 455–463. https://doi.org/10.1111/j.0006-341x.2000.00455.x

Ellis, P. D. (2010). The Essential Guide to Effect Sizes: Statistical Power, Meta-Analysis and the Interpretation of Research Results. Cambridge University Press. https://doi.org/10.1017/CBO9780511761676

Gallagher, S. E., & Savage, T. (2023). Challenge-based learning in higher education: An exploratory literature review. Teaching in Higher Education, 28(6), 1135–1157. https://doi.org/10.1080/13562517.2020.1863354

Glass, G. V. (1976). Primary, Secondary, and Meta-Analysis of Research. Educational Researcher, 5(10), 3–8. https://doi.org/10.3102/0013189X005010003

Grewal, D., Puccinelli, N., & Monroe, K. B. (2018). Meta-analysis: Integrating accumulated knowledge. Journal of the Academy of Marketing Science, 46(1), 9–30. https://doi.org/10.1007/s11747-017-0570-5

Groenewald, E., Kilag, O. K., Unabia, R., Manubag, M., Zamora, M., & Repuela, D. (2023). The Dynamics of Problem-Based Learning: A Study on its Impact on Social Science Learning Outcomes and Student Interest. Excellencia: International Multi-Disciplinary Journal of Education, 1(6), Article 6. https://multijournals.org/index.php/excellencia-imje/article/view/184

Guo, P., Saab, N., Post, L. S., & Admiraal, W. (2020). A review of project-based learning in higher education: Student outcomes and measures. International Journal of Educational Research, 102, 101586. https://doi.org/10.1016/j.ijer.2020.101586

Hassan, A. M., & Ahmad, A. C. (2023). A Conceptual Framework to Design and Develop Project Based Learning Instruments and Rubrics for Students with Autism in Learning English Language. International Research Journal of Education and Sciences, 7, 1-2023.

Hawari, A. D. M., & Noor, A. I. M. (2020). Project Based Learning Pedagogical Design in STEAM Art Education. Asian Journal of University Education, 16(3), 102–111.

Hedges, L. V., & Vevea, J. L. (1998). Fixed- and random-effects models in meta-analysis. Psychological Methods, 3(4), 486–504. https://doi.org/10.1037/1082-989X.3.4.486

Huberman, A. M., & Miles, M. B. (2002). The Qualitative Researcher’s Companion. Sage Publications.

Huffcutt, A. I. (2002). Research perspectives on meta-analysis. In S. G. Rogelberg (Ed.), Handbook of Research Methods in Industrial and Organizational Psychology (pp. 198-215). Blackwell Publishers.

Hussein, B. (2021). Addressing Collaboration Challenges in Project-Based Learning: The Student’s Perspective. Education Sciences, 11(8), Article 8. https://doi.org/10.3390/educsci11080434

Kartika, R., Rahman, A., & Iswardi, I. (2022). The Effectivity and Efficiency of Project Based Learning in Achieving Student’s Expected Learning Outcome (A Case Study of Vocational School Students). Proceedings of the 4th International Conference on Educational Development and Quality Assurance (ICED-QA 2021). https://doi.org/10.2991/assehr.k.220303.040

Kokotsaki, D., Menzies, V., & Wiggins, A. (2016). Project-based learning: A review of the literature. Improving Schools, 19(3), 267–277. https://doi.org/10.1177/1365480216659733

Leat, D. (2017). Enquiry and Project Based Learning: Students, school and society through a socio-cultural lens. In D. Leat (Ed.), Enquiry and Project Based Learning: Students, School and Society (pp. 85-107). Routledge.

Leuschner, R., Rogers, D. S., & Charvet, F. F. (2013). A Meta-Analysis of Supply Chain Integration and Firm Performance. Journal of Supply Chain Management, 49(2), 34–57. https://doi.org/10.1111/jscm.12013

Lipsey, M. W., & Wilson, D. B. (2001). Practical Meta-analysis. Sage Publications.

Loyens, S. M. M., van Meerten, J. E., Schaap, L., & Wijnia, L. (2023). Situating Higher-Order, Critical, and Critical-Analytic Thinking in Problem- and Project-Based Learning Environments: A Systematic Review. Educational Psychology Review, 35(2), 39. https://doi.org/10.1007/s10648-023-09757-x

Malik, K. M., & Zhu, M. (2023). Do project-based learning, hands-on activities, and flipped teaching enhance students’ learning of introductory theoretical computing classes? Education and Information Technologies, 28(3), 3581–3604. https://doi.org/10.1007/s10639-022-11350-8

Maia, D., Andrade, R., Afonso, J., Costa, P., Valente, C., & Espregueira-Mendes, J. (2023). Academic Performance and Perceptions of Undergraduate Medical Students in Case-Based Learning Compared to Other Teaching Strategies: A Systematic Review with Meta-Analysis. Education Sciences, 13(3), Article 3. https://doi.org/10.3390/educsci13030238

Maros, M., Korenkova, M., Fila, M., Levicky, M., & Schoberova, M. (2021). Project-based learning and its effectiveness: Evidence from Slovakia. Interactive Learning Environments, 0(0), 1–9. https://doi.org/10.1080/10494820.2021.1954036

Meng, N., Yang, Y., Zhou, X., & Dong, Y. (2022). STEM Education in Mainland China. In M. M. H. Cheng, C. Buntting, & A. Jones (Eds.), Concepts and Practices of STEM Education in Asia (pp. 43–62). Springer Nature. https://doi.org/10.1007/978-981-19-2596-2_3

Mikolajewicz, N., & Komarova, S. V. (2019). Meta-Analytic Methodology for Basic Research: A Practical Guide. Frontiers in Physiology, 10, 203. https://doi.org/10.3389/fphys.2019.00203

Miller, E. C., Reigh, E., Berland, L., & Krajcik, J. (2021). Supporting Equity in Virtual Science Instruction Through Project-Based Learning: Opportunities and Challenges in the Era of COVID-19. Journal of Science Teacher Education, 32(6), 642–663. https://doi.org/10.1080/1046560X.2021.1873549

Moallem, M. (2019). Effects of PBL on Learning Outcomes, Knowledge Acquisition, and Higher‐Order Thinking Skills. In M. Moallem, W. Hung, & N. Dabbagh (Eds.), The Wiley Handbook of Problem-Based Learning (pp. 107–133). https://doi.org/10.1002/9781119173243.ch5

Paryanto, Munadi, S., Purnomo, W., Edy, Nugraha, R. N., Altandi, S. A., Pamungkas, A., & Cahyani, P. A. (2023). Implementation of project-based learning model to improve employability skills and student achievement. AIP Conference Proceedings, 2671(1), 040001. https://doi.org/10.1063/5.0116444

Perry, S. B. (2020). Project-Based Learning. In R. Kimmons & S. Caskurlu (Eds.), The Students’ Guide to Learning Design and Research. EdTech Books. https://edtechbooks.org/studentguide/project-based_learning

Popay, J., Roberts, H., Sowden, A., Petticrew, M., Arai, L., Rodgers, M., Britten, N., Roen, K., & Duffy, S. (2006). Guidance on the conduct of narrative synthesis in systematic reviews: A product from the ESRC Methods Programme. https://doi.org/10.13140/2.1.1018.4643

Pupik Dean, C. G., Grossman, P., Enumah, L., Herrmann, Z., & Kavanagh, S. S. (2023). Core practices for project-based learning: Learning from experienced practitioners in the United States. Teaching and Teacher Education, 133, 104275. https://doi.org/10.1016/j.tate.2023.104275

Randazzo, M., Priefer, R., & Khamis-Dakwar, R. (2021). Project-Based Learning and Traditional Online Teaching of Research Methods During COVID-19: An Investigation of Research Self-Efficacy and Student Satisfaction. Frontiers in Education, 6. https://www.frontiersin.org/articles/10.3389/feduc.2021.662850

Rees Lewis, D. G., Gerber, E. M., Carlson, S. E., & Easterday, M. W. (2019). Opportunities for educational innovations in authentic project-based learning: Understanding instructor perceived challenges to design for adoption. Educational Technology Research and Development, 67(4), 953–982. https://doi.org/10.1007/s11423-019-09673-4

Rehman, N., Zhang, W., & Iqbal, M. (2021a). The use of technology for online classes during the global pandemic: Challenges encountered by the schoolteachers in Pakistan. Liberal Arts and Social Sciences International Journal, 5(2), Article 2. https://doi.org/10.47264/idea.lassij/5.2.13

Rehman, N., Zhang, W., Khalid, M. S., Iqbal, M., & Mahmood, A. (2021b). Assessing the knowledge and attitude of elementary school students towards environmental issues in Rawalpindi. Present Environment & Sustainable Development, 15(1). https://doi.org/10.15551/pesd2021151001

Rehman, N., Zhang, W., Mahmood, A., & Alam, F. (2021c). Teaching physics with interactive computer simulation at secondary level. Cadernos de Educação Tecnologia e Sociedade, 14, 127. https://doi.org/10.14571/brajets.v14.n1.127-141

Rehman, N., Zhang, W., Mahmood, A., Fareed, M. Z., & Batool, S. (2023). Fostering twenty-first century skills among primary school students through math project-based learning. Humanities and Social Sciences Communications, 10(1), 1-12. https://doi.org/10.1057/s41599-023-01914-5

Revelle, K. Z., Wise, C. N., Duke, N. K., & Halvorsen, A.-L. (2020). Realizing the Promise of Project-Based Learning. The Reading Teacher, 73(6), 697–710. https://doi.org/10.1002/trtr.1874

Sari, D., & Prasetyo, Y. (2021). Project-based-learning on critical reading course to enhance critical thinking skills. Studies in English Language and Education, 8, 442–456. https://doi.org/10.24815/siele.v8i2.18407

Schmidt, F. L., & Hunter, J. E. (2015). Methods of Meta-Analysis: Correcting Error and Bias in Research Findings. SAGE Publications. https://doi.org/10.4135/9781483398105

Schneider, B., Krajcik, J., Lavonen, J., Salmela-Aro, K., Klager, C., Bradford, L., Chen, I.-C., Baker, Q., Touitou, I., Peek-Brown, D., Dezendorf, R. M., Maestrales, S., & Bartz, K. (2022). Improving Science Achievement—Is It Possible? Evaluating the Efficacy of a High School Chemistry and Physics Project-Based Learning Intervention. Educational Researcher, 51(2), 109–121. https://doi.org/10.3102/0013189X211067742

Shin, M. H. (2018). Effects of Project-Based Learning on Students’ Motivation and Self-Efficacy. English Teaching, 73(1), 95-114. https://doi.org/10.15858/engtea.73.1.201803.95

Shpeizer, R. (2019). Towards a successful integration of project-based learning in higher education: Challenges, technologies and methods of implementation. Universal Journal of Educational Research, 7(8), 1765-1771. https://doi.org/10.13189/ujer.2019.070815

Suherman, Vidákovich, T., & Komarudin, K. (2021). STEM-E: Fostering mathematical creative thinking ability in the 21st Century. Journal of Physics: Conference Series, 1882, 012164. https://doi.org/10.1088/1742-6596/1882/1/012164

Suyantiningsih, S., Badawi, B., Sumarno, S., Prihatmojo, A., Suprapto, I., & Munisah, E. (2023). Blended Project-Based Learning (BPjBL) on Students’ Achievement: A Meta-Analysis Study. International Journal of Instruction, 16, 1113–1126. https://doi.org/10.29333/iji.2023.16359a

Tuyen, L. V., & Tien, H. H. (2021). Integrating project-based learning into English for specific purposes classes at tertiary level: perceived challenges and benefits. VNU Journal of Foreign Studies, 37(4), Article 4. https://jfs.ulis.vnu.edu.vn/index.php/fs/article/view/4642

Üstün, U., & Eryilmaz, A. (2014). A Research Methodology to Conduct Effective Research Syntheses: Meta-Analysis. Ted Eğitim Ve Bilim, 39. https://doi.org/10.15390/EB.2014.3379

Vasiliene-Vasiliauskiene, V., Vasiliauskas, A. V., & Sabaityte, J. (2020). Peculiarities of Educational Challenges Implementing Project-Based Learning. World Journal on Educational Technology: Current Issues, 12(2), 136–149. https://eric.ed.gov/?id=EJ1272854

Weisburd, D., Wilson, D. B., Wooditch, A., & Britt, C. (2022). Meta-analysis. In D. Weisburd, D. B. Wilson, A. Wooditch, & C. Britt (Eds.), Advanced Statistics in Criminology and Criminal Justice (pp. 451–497). Springer International Publishing. https://doi.org/10.1007/978-3-030-67738-1_11

Yustina, Y., Syafii, W., & Vebrianto, R. (2020). The Effects of Blended Learning and Project-Based Learning on Pre-Service Biology Teachers’ Creative Thinking through Online Learning in the Covid-19 Pandemic. Journal Pendidikan IPA Indonesia, 9(3), Article 3. https://doi.org/10.15294/jpii.v9i3.24706

Zhang, L., & Ma, Y. (2023). A study of the impact of project-based learning on student learning effects: A meta-analysis study. Frontiers in Psychology, 14, 1202728. https://doi.org/10.3389/fpsyg.2023.1202728